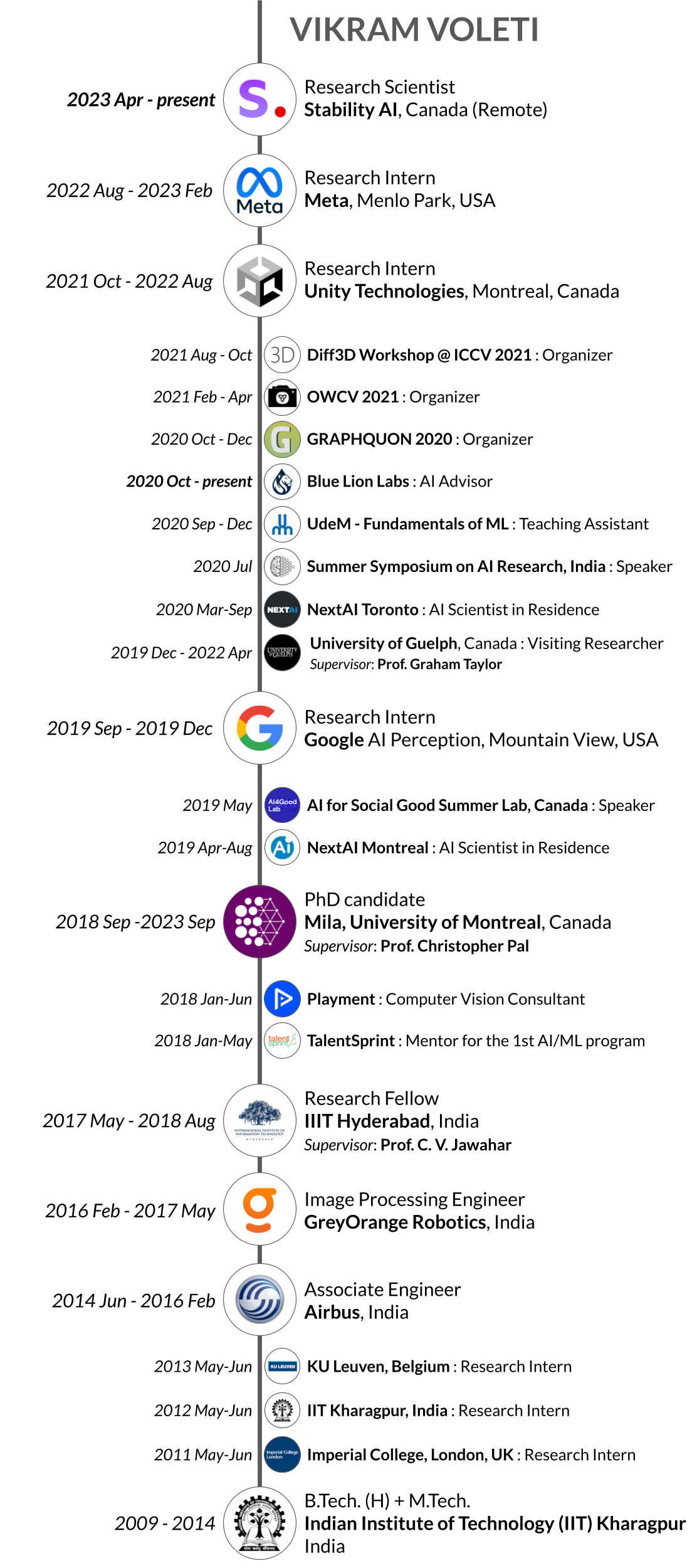

CURRENT

April 2023 — present

Stability AI - Research Scientist

Canada (Remote)

I lead AI research and development on generating 3D objects, images, and videos from text.

EDUCATION

2009 — 2014

Indian Institute of Technology (IIT), Kharagpur, India

Dual Degree (B.Tech. (H) + M.Tech.) in Electrical Engineering

with master’s specialization in Instrumentation and Signal Processing

EXPERIENCE

April 2023 — present

Stability AI - Research Scientist

Canada (Remote)

I lead AI research and development on generating 3D objects, images, and videos from text.

2018 — 2023

Mila, University of Montreal - Ph.D. student

Montreal, Canada

- Image generation using Multi-Resolution Continuous Normalizing Flows

- Image generation using Non-Isotropic Denoising Diffusion Models

- 3D animation using neural inverse kinematics with 3D human pose prior

- Video prediction using Neural ODEs

- Video prediction, generation, interpolation using Masked Conditional Video Diffusion models

August 2022 — February 2023

Meta - Research Intern

Menlo Park, California, USA

I worked with the AI4AR team at Meta, hosted by Yashar Mehdad.

- Led the technology development for generating 3D objects and videos from text (DreamFusion, NeRF)

- Applied expertise in neural graphics for 3D rendering; implemented hands-on in PyTorch

- Worked in an international AI team; the technology transitioned into a Meta end product and was adopted by other teams

October 2021 — August 2022

Unity Technologies - MITACS Research Intern

Montreal, Canada

I was a MITACS Research Intern with the DeepPose team at Unity Labs, hosted by Boris Oreshkin. I worked on 3D human pose estimation and inverse kinematics from videos.

| SMPL-IK - Learned Morphology-Aware Inverse Kinematics for AI Driven Artistic Workflows, Vikram Voleti, Boris N. Oreshkin, Florent Bocquelet, Félix G. Harvey, Louis-Simon Ménard, Christopher Pal - SIGGRAPH Asia 2022 |

August 2021 — October 2021

Diff3D workshop @ ICCV 2021 - Organizer

Canada

I was a co-organizer of the Differentiable 3D Vision and Graphics workshop at ICCV 2021.

February 2021 — April 2021

OWCV 2021, Canada - Organizer

Canada

I was a co-organizer of the inaugural Ontario Workshop on Computer Vision 2021.

October 2020 — March 2025

Blue Lion Labs - AI Advisor

Waterloo, Canada

I advised Blue Lion Labs, an early-stage startup that provides technology to automatically monitor different organisms in water using machine learning.

October 2020 — December 2020

GRAPHQUON 2020, Canada - Organizer

Canada

I was part of the organizing committee of GRAPHQUON 2020 (formerly MOTOGRAPH).

September 2020 — December 2020

Fundamentals of Machine Learning (IFT 6390), by Ioannis Mitliagkas - Teaching Assistant

University of Montreal, Montreal, Canada

March 2020 — September 2020

NextAI, Toronto - AI Scientist in Residence

Toronto, Canada

I was a mentor/consultant for multiple startups at NextAI. I assisted them in integrating artificial intelligence and machine learning into their product pipeline, and with long-term strategies in technology.

September 2019 — December 2019

Google - Research Intern

Mountain View, California, USA

I worked with the Google AI Perception team on deep models for large-scale video analysis for active speaker detection with Bryan Seybold and Sourish Chaudhuri.

Fundamentals of Machine Learning (IFT 6390), by Ioannis Mitliagkas - Teaching Assistant

University of Montreal, Montreal, Canada

April 2019 — August 2019

NextAI, Montreal - AI Scientist in Residence

Montreal, Canada

I was a mentor/consultant for 6 startups at NextAI. I assisted them in integrating artificial intelligence and machine learning into their product pipeline, and with long-term strategies in technology.

January 2018 — June 2018

Playment - Computer Vision Consultant

Bengaluru, India

Playment is a startup that offers annotation services for various computer vision tasks.

I was a consultant for the computer vision work at Playment. We focused on making semantic segmentation for autonomous driving more exhaustive and comprehensive using deep learning. We also used classical computer vision as well as deep learning to solve various industrial problems, including facial recognition, facial landmark detection, and pedestrian detection.

January 2018 — May 2018

IIIT-Hyderabad & Talent Sprint - Mentor for Fundamentals of AI/ML

Hyderabad, India

I was a Mentor for the Foundations of Artificial Intelligence and Machine Learning certificate program by IIIT-H Machine Learning Lab and TalentSprint. I assisted in creating tutorials on machine learning, and mentored participants during lab sessions.

May 2017 — August 2018

International Institute of Information Technology (IIIT) - Hyderabad - Research Fellow

Hyderabad, India — with Prof. C. V. Jawahar, Centre for Visual Information Technology, IIIT Hyderabad

- Video Translation

  - Generated videos of movies and educational tutorials of Andrew Ng in Indian languages by morphing lip movement

  - Experimented with GANs (Pix2Pix) to generate videos using original faces, new lip landmarks, and dubbed audio

- Assessor for Lipreader

  - Built a visual speech recognizer (lipreader) to classify spoken words in videos, and an assessor to check if the lipreader’s output is correct by combining convolutional and recurrent neural networks

  - Used the lipreader and assessor for self-training on unlabelled data, zero-shot learning on out-of-vocabulary words, and information retrieval

| Conference paper: Abhishek Jha, Vikram Voleti, Vinay P. Namboodiri, C. V. Jawahar, “Cross-Language Speech Dependent Lip-Synchronization”, in ICASSP 2019 [IEEE] |

| Workshop paper: Abhishek Jha, Vikram Voleti, Vinay P. Namboodiri, C. V. Jawahar, “Lip-Synchronization for Dubbed Instructional Videos”, in CVPR Workshop (FIVER), 2018 |

Feb 2016 — May 2017

GreyOrange Robotics - Image Processing Engineer

Gurgaon, India

GreyOrange Robotics is a multinational firm that designs, manufactures and deploys advanced robotics systems for automation at warehouses, distribution and fulfillment centres.

I was part of the Embedded Systems team. My job was to develop a computer vision module to perform video processing in real time for warehouse automation. We made an “Empty Carriage Detection System” (ECDS) for the “Cross-Belt Sorter” (CBS) that detects in real time whether a carriage on a conveyor belt has a packet on it or not, and relays the information to the server and mechanical systems. I also helped develop the embedded vision module in automated guided robots for warehouses, called “Butlers”.

A research paper based on some of the work has been accepted at the International Conference on Industrial Design Engineering, ICIDE 2017.

| Research paper: V. Voleti, P. Mohan, S. Gupta, J. Iqbal, “Simple Real-Time Pattern Recognition for Industrial Automation”, in Proc. International Conference on Industrial Design Engineering, 2017 |

July 2014 — Feb 2016

Airbus, India - Associate Engineer

Bengaluru, India

Airbus is a commercial aircraft manufacturer, and the largest aeronautics & space company in Europe. I worked in the Bangalore (India) office as part of the Avionics Software and Systems Testing group. I was involved in the development and integration of avionics systems in the Flight Warning Computer (FWC) for aircraft in the long-range family.

My job was to simulate signal-level changes in the Flight Warning Computer, such as adding new signals for new functionalities and re-routing signals through different paths. This was followed by rigorous testing of the FWC for correct operation. We designed the re-routing paths, as well as the tests required to ensure all the functionalities of the FWC run correctly. For all development, standard avionics coding guidelines (DO-178B) were followed.

THESIS PROJECTS

2013 — 2014

Image De-fencing using Microsoft Kinect — M.Tech. Thesis

IIT Kharagpur, India — under Prof. Rajiv Ranjan Sahay, Electrical Engineering

I worked on de-fencing of images using RGB-D data from Microsoft Kinect. We recorded images of scenes with fence-like occlusions and were successful in removing the fences from the scenes. We first recorded multiple images of the same scene with slight spatial variation of the camera, and computed the approximate global shift among them. We then used loopy belief propagation to inpaint the occluded regions. We compared our technique with the existing standard methods, and our method was demonstrated to be better.

A research paper based on this work has been published in IEEE Xplore in the proceedings of the International Conference on Advances in Pattern Recognition, ICAPR 2015. A journal paper based on this work was submitted to the International Journal of Computer Vision (IJCV).

| Research paper: S. Jonna, V. S. Voleti, R. R. Sahay, and M. S. Kankanhalli, “A Multimodal Approach for Image De-fencing and Depth Inpainting”, in Proc. Int. Conf. Advances in Pattern Recognition, 2015, pp. 1—6 |

| Journal paper: S. Jonna, S. Satapathy, V. S. Voleti, R. R. Sahay, “Unveiling the scene: A Multimodal Framework for Simultaneous Image Disocclusion and Depth Map Completion using Computational Cameras”, International Journal of Computer Vision, 2017 | (rejected) |

| THESIS | Presentation | GitHub repository containing thesis, presentation, code files, and results |

2012 — 2013

Identification of Bilabial Consonants in Audio and Lip Closures in Video — B.Tech. Thesis

IIT Kharagpur, India — under Prof. Rajiv Ranjan Sahay, Electrical Engineering

I worked on the identification of bilabial consonants in video and audio. The goal was to measure the time offset between the two modes using corresponding time points where bilabials occur. I learnt C++ and the OpenCV library, and detected lip closures in video using the standard Viola-Jones face detector, and a novel algorithm for lip closure detection. I trained a Gaussian Mixture Model in MATLAB on the MFCC features of bilabials in the speech signals of different speakers. A correlation was drawn between the time points of bilabials in audio and video.
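As a rough illustration of the offset measurement (not the thesis code, which used C++/OpenCV and MATLAB), the sketch below assumes the bilabial time points in audio and the lip-closure time points in video have already been detected. It searches a grid of candidate shifts and keeps the one that brings the video events closest to the audio events. The function name, the grid search, and the sample timestamps are all assumptions made for this example.

```python
def estimate_offset(audio_times, video_times, max_shift=1.0, step=0.01):
    """Return the shift (in seconds) to add to the video event times so that
    they best line up with the audio event times.

    Hypothetical helper: tries shifts from -max_shift to +max_shift and picks
    the one with the smallest total distance to the nearest audio event.
    """
    best_shift, best_cost = 0.0, float("inf")
    n_steps = int(round(2 * max_shift / step))
    for k in range(n_steps + 1):
        shift = -max_shift + k * step
        # Total distance from each shifted video event to its nearest audio event.
        cost = sum(
            min(abs(v + shift - a) for a in audio_times) for v in video_times
        )
        if cost < best_cost:
            best_shift, best_cost = shift, cost
    return round(best_shift, 6)


# Made-up example: the video events lead the audio events by 0.2 s.
audio_events = [1.0, 2.5, 4.2, 6.0]
video_events = [0.8, 2.3, 4.0, 5.8]
offset = estimate_offset(audio_events, video_events)
```

A grid search is used here only because it is easy to follow; a cross-correlation of the two event trains would serve the same purpose.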

| THESIS | Presentation | GitHub repository containing thesis, presentation, code files, and results |

PAST INTERNSHIPS

Summer 2013

KU Leuven, Belgium

Supervisor: Prof. Ingrid Verbauwhede, Computer Security & Industrial Applications, ESAT

Implementation of Carry-Free Arithmetic Operations in FPGA

I worked on the carry-free implementations of arithmetic operations of addition, subtraction and multiplication. Binary numbers are first converted to a recoded digit format that eliminates carry propagation. I designed the truth tables for this conversion, as well as subsequent addition, subtraction and multiplication. I then simplified the circuits into Product-of-Sums form, and coded them in Verilog. The time taken by these circuits was compared with that of the standard implementation.
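To illustrate what "carry-free" means, here is a minimal Python sketch of addition in a redundant signed-digit format. The exact recoding used in the project is not stated above, so the radix-4 digit set {-3, ..., 3} and all function names below are assumptions chosen for the example. The point it shows is that each output digit depends only on its own position and the one below it, so no carry ripples across the word.

```python
RADIX = 4  # assumed radix; digits are allowed to range over -3..3


def to_digits(n):
    """Little-endian radix-4 digits of an integer, carrying the sign on each digit."""
    sign = -1 if n < 0 else 1
    n = abs(n)
    digits = []
    while n:
        digits.append(sign * (n % RADIX))
        n //= RADIX
    return digits or [0]


def from_digits(digits):
    """Convert little-endian signed digits back to an integer."""
    return sum(d * RADIX**i for i, d in enumerate(digits))


def carry_free_add(x, y):
    """Add two signed-digit numbers without carry propagation."""
    n = max(len(x), len(y))
    x = x + [0] * (n - len(x))
    y = y + [0] * (n - len(y))
    transfer = [0] * (n + 1)
    interim = [0] * (n + 1)
    # Step 1: every position independently picks a transfer digit and an
    # interim digit kept within -2..2.
    for i in range(n):
        p = x[i] + y[i]
        if p >= 3:
            transfer[i + 1], interim[i] = 1, p - RADIX
        elif p <= -3:
            transfer[i + 1], interim[i] = -1, p + RADIX
        else:
            interim[i] = p
    # Step 2: absorbing the incoming transfer cannot overflow the digit set,
    # so no second transfer is ever generated.
    return [interim[i] + transfer[i] for i in range(n + 1)]


total = from_digits(carry_free_add(to_digits(255), to_digits(1)))
```

Subtraction follows by negating every digit of the second operand before adding. In hardware, both steps are small fixed-depth circuits per digit, which is why the delay does not grow with word length.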

A single-author research paper based on this work has been written.

| Research paper: V. Voleti, “Carry-Free Implementations of Arithmetic Operations in FPGA” |

| Report | Presentation | GitHub repository containing report and presentation |

Summer 2012

IIT Kharagpur, India

Supervisor: Prof. Aurobinda Routray, Electrical Engineering

Fingertip Gesture Recognizer using HMMs

I first implemented Hidden Markov Models (HMM) in MATLAB from scratch, and verified the outputs of my implementation against those of a standard implementation. I then made a simple gesture recognizer in MATLAB using HMMs.
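The recognition step can be sketched as follows, in Python rather than the MATLAB used in the project. This is only an illustration under assumptions: one discrete HMM per gesture, scored with the forward algorithm, and the most likely model wins. The two toy models, their parameters, and the gesture names are hypothetical.

```python
def forward_likelihood(obs, start, trans, emit):
    """P(obs | model) for a discrete HMM, computed with the forward algorithm."""
    n_states = len(start)
    # Initialisation: probability of starting in each state and emitting obs[0].
    alpha = [start[s] * emit[s][obs[0]] for s in range(n_states)]
    # Recursion: propagate through the transitions, then emit the next symbol.
    for o in obs[1:]:
        alpha = [
            emit[j][o] * sum(alpha[i] * trans[i][j] for i in range(n_states))
            for j in range(n_states)
        ]
    return sum(alpha)


def classify(obs, models):
    """Return the name of the model under which obs is most likely."""
    return max(models, key=lambda name: forward_likelihood(obs, *models[name]))


# Hypothetical two-state, two-symbol models: (start, transition, emission).
left_to_right = [[0.5, 0.5], [0.0, 1.0]]
models = {
    "gesture_a": ([1.0, 0.0], left_to_right, [[0.9, 0.1], [0.1, 0.9]]),
    "gesture_b": ([1.0, 0.0], left_to_right, [[0.1, 0.9], [0.9, 0.1]]),
}
label = classify([0, 0, 1, 1], models)
```

For long observation sequences the plain products underflow, so a practical version would rescale `alpha` at each step or work in log space.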

| Report | Presentation | GitHub repository containing report, presentation, code files, and results |

Summer 2011

Imperial College, London, UK

Supervisor: Prof. Peter Cheung, Electrical and Electronic Engineering

Measurement of Intra-die Power Variation in Sub-nm FPGAs

I experimented with an FPGA, and measured how power consumption varies across the Look-Up Tables (LUTs) within it. I built an automated workflow for measuring power across the FPGA: implementing a circuit in each LUT, measuring the power on an oscilloscope using the JTAG terminals on the FPGA, recording the oscilloscope’s readings in MATLAB, and plotting graphs from MATLAB.

| Presentation | GitHub repository containing presentation, certificate, and recommendation letter from Prof. Peter Cheung |